Create a Logical Data Model from CSV Files

For workspace administrators only

This tutorial guides you through the process of creating a logical data model (LDM) in your workspace from CSV files. A newly created workspace does not have an LDM therefore you are going to create an LDM from scratch.

For the purpose of this tutorial, you are going to create a simple LDM with three datasets and publish it to your workspace.

You create an LDM in the LDM Modeler. To do so, perform the following steps:

- Prepare CSV files.

- Add a dataset with attributes.

- Create a dataset with facts and a Date dataset.

- Publish the LDM.

If you do not have CSV files, you can create an LDM:

- Manually (see Create a Logical Data Model Manually)

- From your cloud data warehouse (see Create a Logical Data Model from Your Cloud Data Warehouse)

- You cloud object storage service (see Create a Logical Data Model from Your Cloud Object Storage Service)

- From the Output Stage if you use it (see Create a Logical Data Model from the Output Stage)

When you are working on your LDM:

- The changes are automatically saved as a draft as you are making them. The draft is saved under your GoodData user, on the machine and in the browser it was created, and you can continue editing it whenever you are ready. When the LDM Modeler saves your draft, it displays a message and the time the draft was last saved. The draft is kept until you either publish the changes to the workspace or manually discard the draft (in this case, the last published version of the LDM is loaded in the LDM Modeler).

- The LDM is validated as you are making changes. You are warned you if there is an issue (for example, an empty dataset or a dataset with a missing title).

Prepare CSV Files

Make sure that your CSV files meet the requirements described in CSV File Requirements.

In this tutorial, you are going to use two CSV files, product.csv and order_line.csv. Download them to your machine.

product.csv(download as .CSV)product_id,product_name,category 150,Skirt,Clothing 210,Artego,Electronics 240,PortaCode,Electronics 420,Elentrix,Outdoor 430,Integres,Outdoor 120,"Polo Shirt",Clothing 110,Pullover,Clothing 310,Applica,Home 330,Optique,Homeorder_line.csv(download as .CSV)order_line_id,order_id,order_status,date,product_id,price,quantity 10668-9VYN74-2,10668-9VYN74,Cancelled,2016-07-12,210,100.33,1.00 10697-7GBN87-1,10697-7GBN87,Delivered,2017-12-05,310,39.35,1.00 10907-0TPH53-3,10907-0TPH53,Delivered,2015-03-28,220,50.40,1.00 10778-7INQ32-1,10778-7INQ32,Delivered,2018-02-08,230,78.40,1.00 10240-3SBQ40-3,10240-3SBQ40,Delivered,2017-05-07,160,21.49,1.00 10356-7ZBU60-1,10356-7ZBU60,Cancelled,2015-03-01,140,21.49,1.00 10700-0ACT49-1,10700-0ACT49,Returned,2019-02-20,150,21.82,1.00 10175-0YRN35-3,10175-0YRN35,Delivered,2015-04-11,150,19.15,3.00 10152-6HOB25-1,10152-6HOB25,Cancelled,2016-03-04,140,29.67,1.00

Add a Dataset with Attributes

You are going to create your first dataset from the product.csv file. This CSV file contains only attributes. Attributes are data that is to be used in grouping or segmenting the values resulting from the computed functions (for example, countries, names, IDs). In almost all use cases, this data is non-numerical. For more information about the attributes, see Attributes in Logical Data Models.

You are also going to set one of the attributes in the dataset as a primary key. The primary key defines the unique identifier for a row of data in a file and serves as a connection point that allows you to connect this dataset to another dataset. In other words, you will be able to create a relationship between the dataset that you are going to create from the product.csv file and another dataset that you will be creating later in this tutorial. A relationship between two datasets allows you to use information from one dataset to slice the data in the other dataset. The dataset with the data that you want to use to help analyze the other data is the one that must have a primary key. In this tutorial, this dataset is the one created from the product.csv file. For more information about primary keys, see Primary and Foreign Keys and Connection Points in Logical Data Models.

Steps:

On the top navigation bar, select Data. The LDM Modeler opens. You see a blank canvas area in view mode.

Click Edit. The LDM Modeler is switched to edit mode.

Drag the

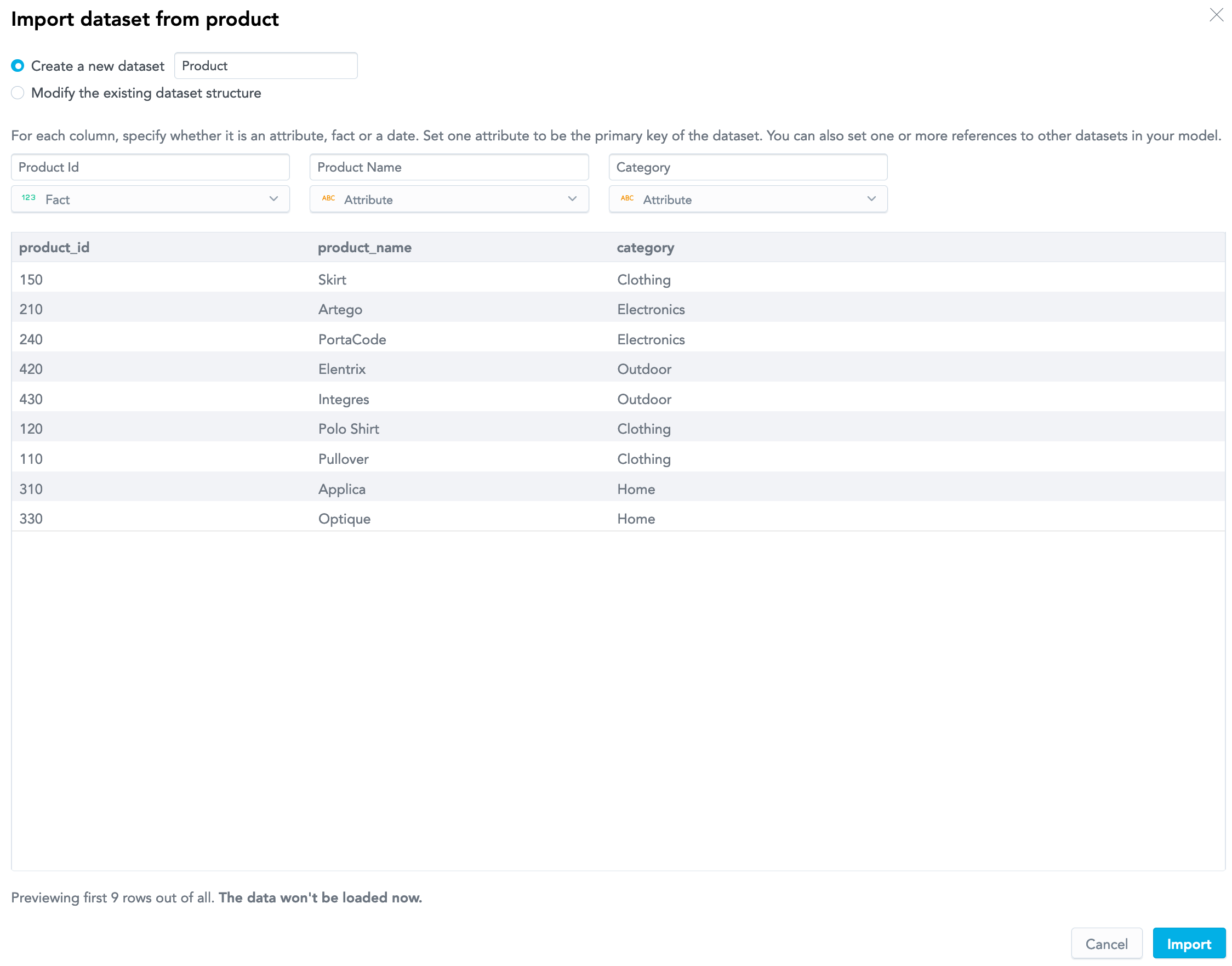

This preview shows the data from your file. Based on the column names and the contents of those columns in the CSV file, the column names and the types of the data are suggested for the dataset that will be created from this file. For example, theproduct.csvfile and drop it in the blank canvas area. The file preview opens.product_namecolumn is detected as an attribute based on the values in the column (non-numerical data) and will be namedProduct Namein the dataset.Notice that the option for creating a new dataset from the CSV file is selected, and the name for the new dataset is predefined as

Productbased on the name of the CSV file. Keep this option selected and leave the name as suggested.Notice that the

product_idcolumn is detected as a fact, which is a numerical piece of data, which in a business environment is used to measure a business process (see Facts in Logical Data Models). This is because it contains numbers. By default, numbers are auto-detected to be facts. However, theproduct_idcolumn contains the identifiers of the products. Those are non-measurable descriptive data, and therefore must be an attribute. Because the product IDs are unique, you can use it as a primary key.Change the data type for the

product_idcolumn from Fact to Primary key. If you do not set the primary key now, you will be able to do it after the dataset has been created (see Update a Logical Data Model).Review the suggested column names and the data types for the other columns. These columns are correctly detected to be attributes.

Click Import. The CSV file is imported, and the

Productdataset is added.

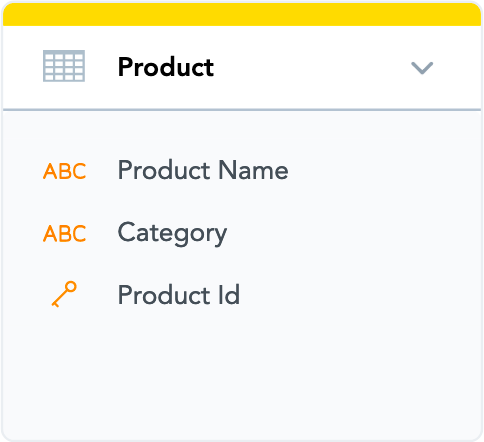

Notice the following:

- The dataset is marked with orange. This is because the dataset contains only attributes and no facts.

- The

Product Idattribute is marked as the primary key. This means that you will be able to create a relationship between this dataset and other datasets in the logical data model.

You are now going to add another dataset from the

order_line.csvfile.

Create a Dataset with Facts and a Date Dataset

You are going to create another dataset from the order_line.csv file. This CSV file contains attributes, facts, and dates.

In an LDM, dates are managed through a separate object, the Date dataset (see Dates in the Logical Data Model). Therefore, after you have imported the order_line.csv file, not one but two datasets will be added to your LDM: one Date dataset for the dates, and the other one with the rest of information. These two datasets will be automatically connected with a relationship.

You are also going to establish a relationship between the dataset created from the order_line.csv file and the Product dataset that you created earlier.

Steps:

- Drag the

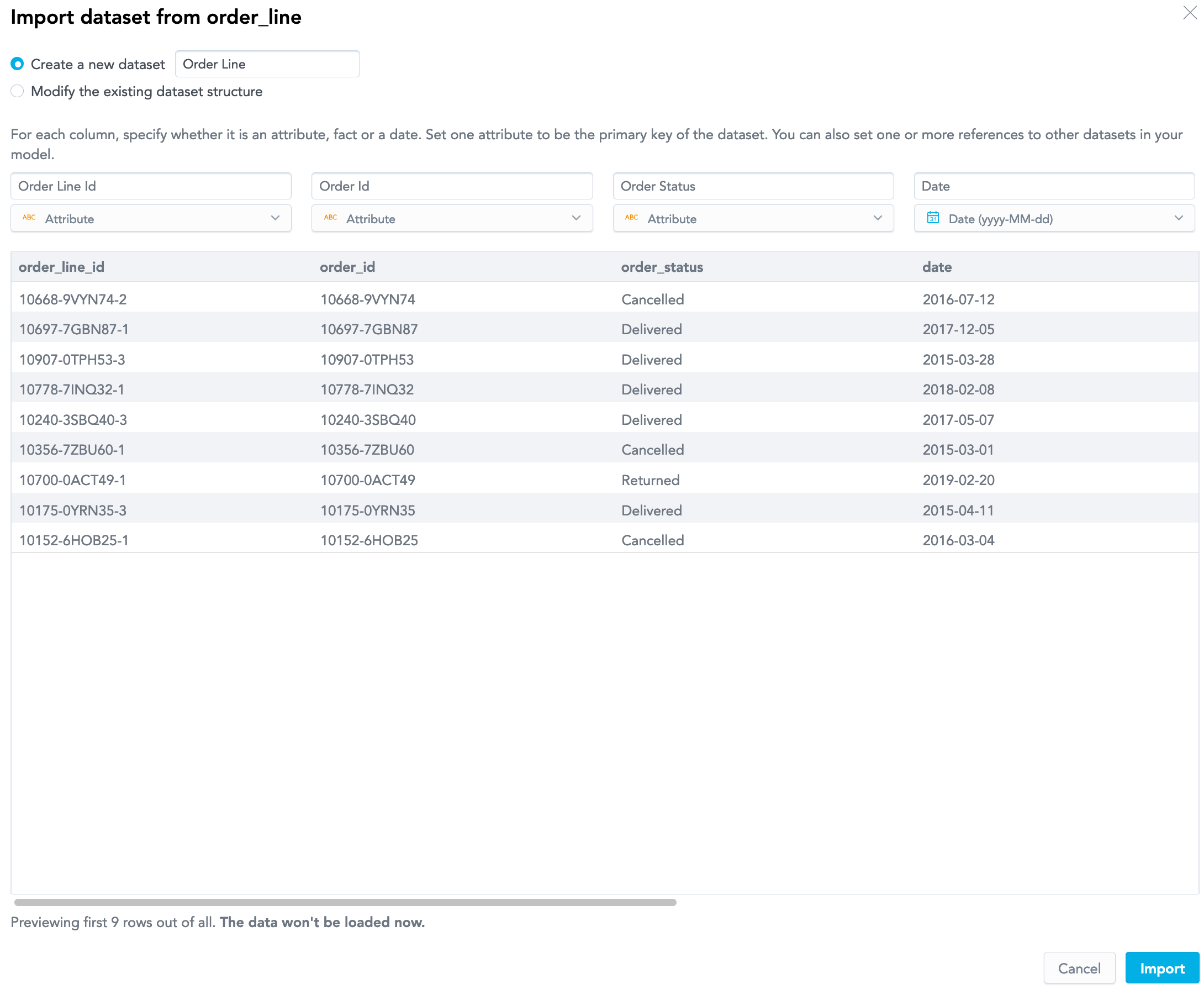

order_line.csvfile and drop it in the canvas area. The file preview opens. Notice the following:- The

order_line_id,order_id, andorder_statuscolumns are correctly detected as attributes. - The

datecolumn is correctly detected as dates in theyyyy-MM-ddformat and will be converted to a separate Date dataset. - The

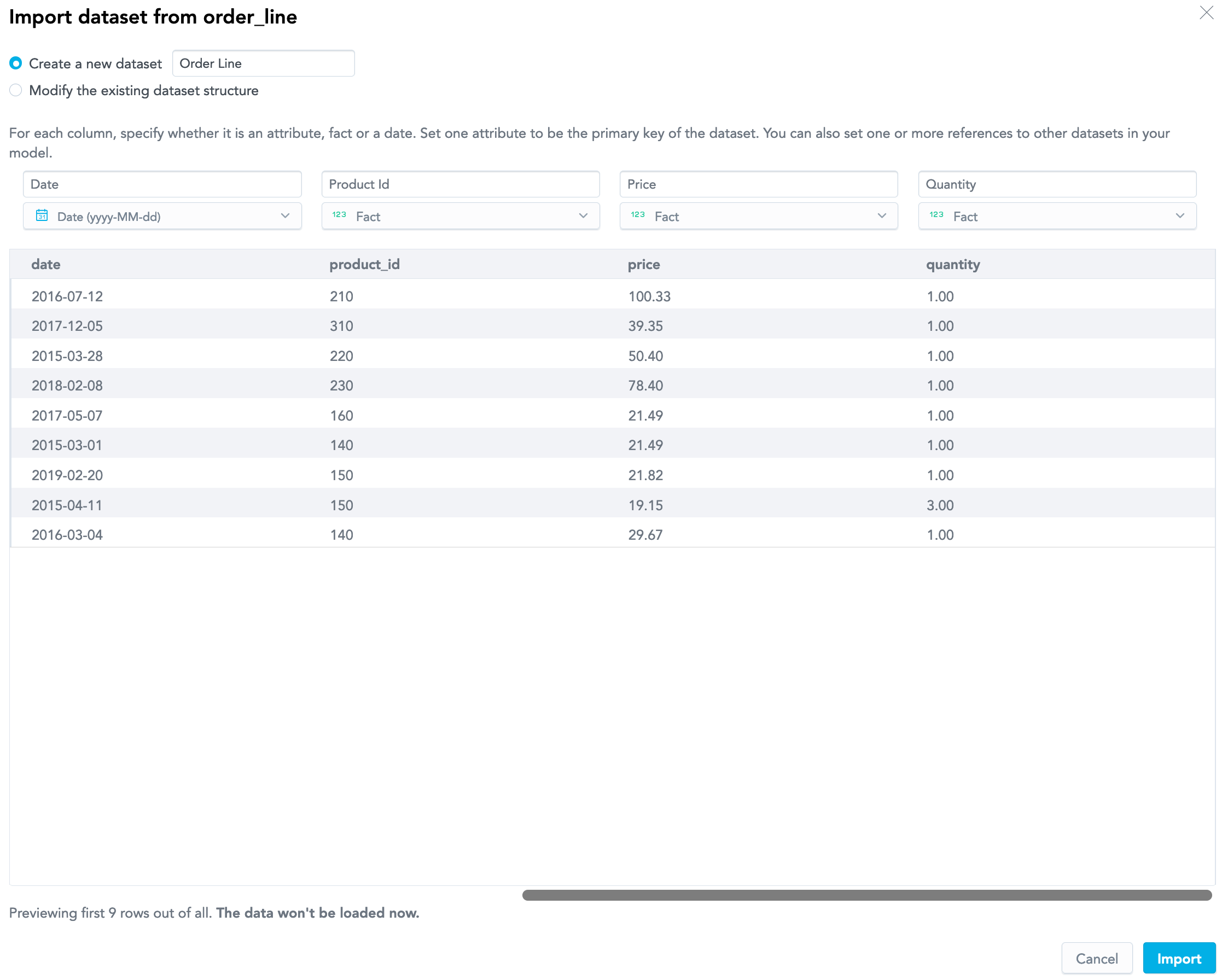

priceandquantitycolumns are correctly detected as facts.

- The

- Notice that the option for creating a new dataset from the CSV file is selected, and the name for the new dataset is predefined as

Order Linebased on the name of the CSV file. Keep this option selected and leave the name as suggested. - Notice that the

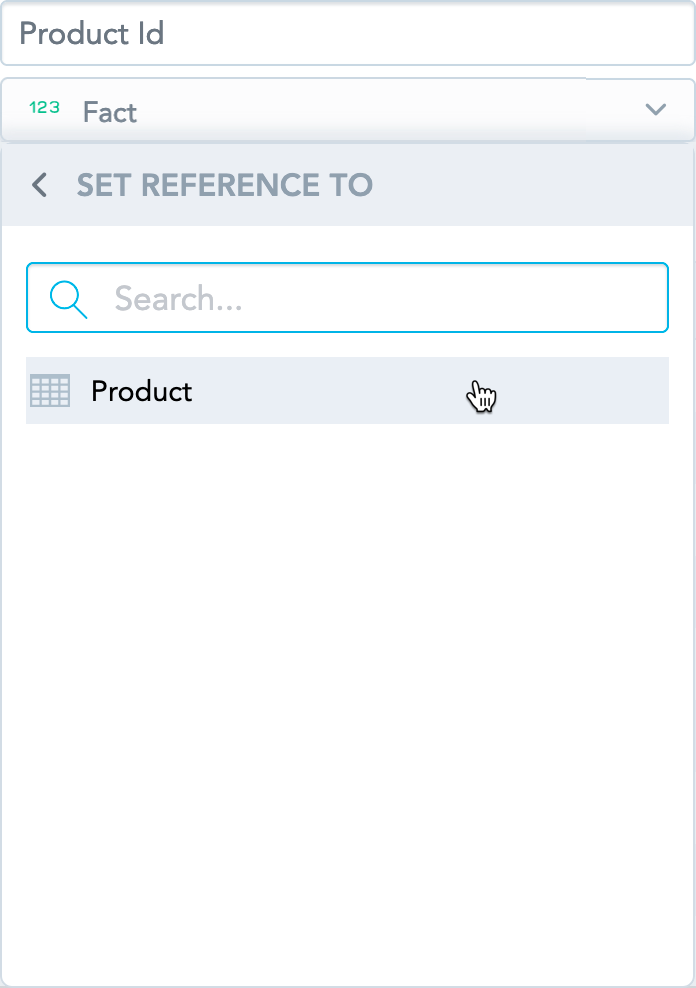

product_idcolumn is detected as a fact. This is because it contains numbers. However, this column contains the product identifiers and therefore must be an attribute. Earlier in this tutorial, when creating theProductdataset, you set theProduct Idattribute to be the primary key of this dataset. Now, you can set theproduct_idcolumn in theorder_line.csvfile as a reference and thus create a relationship between these two datasets. - Change the data type for the

product_idcolumn from Fact to Reference. When prompted, select Product as the dataset to set the reference to.

- Click Import. The CSV file is imported, and the

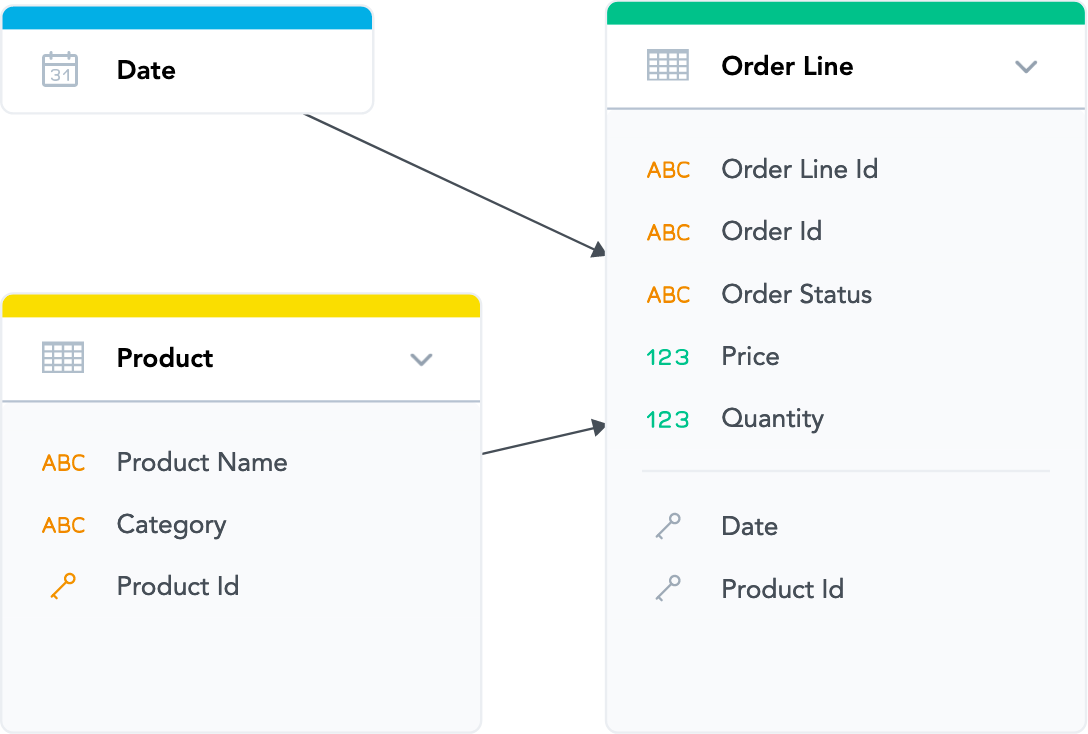

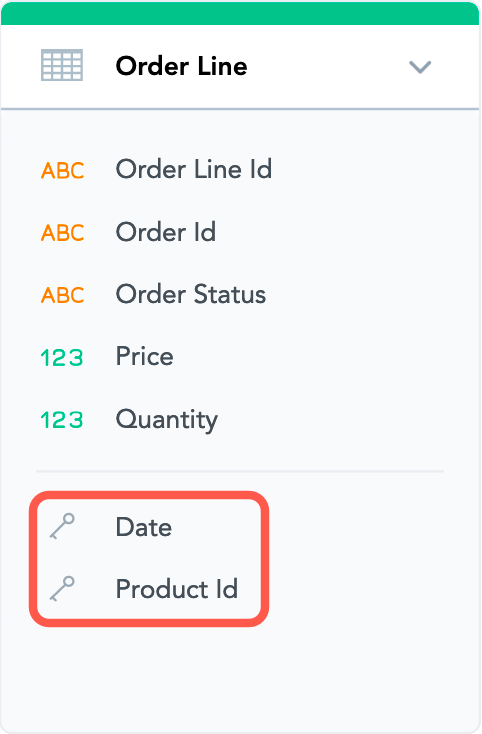

Order Linedataset and theDatedataset are added. Notice the following:- The

Order Linedataset is marked with green. This is because the dataset contains facts. - The

Datedataset is marked with blue. This is because this is a date dimension dataset. - A relationship is created between the

Datedataset and theOrder Linedataset. This is because the dates were extracted from theorder_line.csvfile. The direction of the arrow determines which dataset’s data can be used to analyze (slice) the data from the other dataset. The relationship between theDatedataset and theOrder Linedataset allows you to slice the data from theOrder Linedataset (an incoming relationship to theOrder Linedataset) by date (an outgoing relationship from theDatedataset). - A relationship is created between the

Productand theOrder Linedataset. This is because you connected these datasets by theProduct Idprimary key in theProductdataset. The direction of the arrow determines which dataset’s data can be used to analyze (slice) the data from the other dataset. The relationship between theProductdataset and theOrder Linedataset allows you to slice the data from theOrder Linedataset (an incoming relationship to theOrder Linedataset) by product (an outgoing relationship from theProductdataset). - References to the

Product Idattribute and theDatedataset have been added to theOrder Linedataset. These references indicate that theProduct Idfield and theDatedataset are foreign keys in theOrder Linedataset.

- The

Your LDM is ready. You can now publish it.

Publish the LDM

To publish the LDM, follow the instructions from Publish a Logical Data Model.

Mapping the Datasets to Source Tables

Any dataset in your LDM must have each field (a fact or an attribute) unambiguously mapped to a column in a source table (represented by a table in your data warehouse or a CSV file). The dataset itself must be mapped to this source table.

During data load, the data from the columns in the source table will be loaded to the corresponding facts or attributes in the dataset.

In a dataset that was created from a CSV file, the dataset fields are mapped to the columns in that CSV file. You can use CSV files with the same column names to load data to the dataset. For more information, see Update Data in a Dataset from a CSV File.

If you want to switch to loading data from a table in the data warehouse and this table has the same name and the column names as the CSV file, you can keep the mapping as is. If the table has a different name and/or column names, manually update the dataset so that its fields are mapped to the table columns and the dataset has the table name mapped to it. For more information, see Mapping between a Logical Data Model and the Data Source.

Always keep the LDM synchronized with the source of the data. Whenever you change the source of the data, update the LDM accordingly.

For example, if you add a column to a source table, add a corresponding field (attribute or fact) to the dataset mapped to this table, and then map this field to the table column. Otherwise, you will not be able to load data from this column to your workspaces.

Similarly, if you delete a column from a source table or delete a whole table, delete the corresponding field from the mapped dataset or the mapped dataset itself.

For more information about updating the LDM, see Update a Logical Data Model.