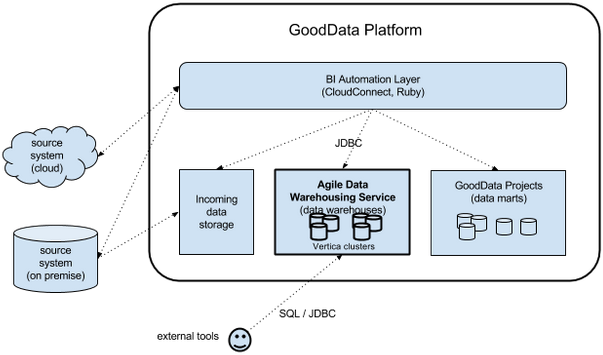

Data Warehouse and the GoodData Platform Data Flow

This article explains the Data Warehouse data flow within the GoodData platform.

The typical data flow is as follows:

Source data is uploaded in one of the following ways:

- The data is uploaded to GoodData’s incoming data storage via WebDAV. The BI automation layer, typically custom CloudConnect ETL or Ruby scripts, collects it from the storage.

- The automation layer directly retrieves the data from the source system’s API.

For more information on project-specific storage, see Workspace Specific Data Storage.
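As a rough illustration of the first upload option, a WebDAV file upload is an HTTP PUT against the storage URL. The sketch below only builds such a request with Python's standard library and does not send it. The storage URL, remote path, credentials, and the use of Basic authentication are all assumptions made for the example, not values taken from the GoodData platform; `build_webdav_put` is a hypothetical helper name.

```python
import base64
import urllib.request


def build_webdav_put(base_url, remote_path, payload, user, password):
    """Build (but do not send) an HTTP PUT request that uploads `payload`."""
    # Join the storage root and the target path with exactly one slash.
    url = base_url.rstrip("/") + "/" + remote_path.lstrip("/")
    # Basic authentication is an assumption; the real storage may differ.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    request = urllib.request.Request(url, data=payload, method="PUT")
    request.add_header("Authorization", "Basic " + token)
    request.add_header("Content-Type", "text/csv")
    return request


# Hypothetical storage URL, file name, and credentials, for illustration only.
request = build_webdav_put(
    "https://webdav.example.com/project-uploads",
    "incoming/orders.csv",
    b"order_id,amount\n1,19.90\n",
    "user@example.com",
    "secret",
)
# To actually upload the file: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes it easy to inspect the URL and headers before any data leaves the source system.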

ETL graphs are created and published from CloudConnect Designer. For more information on CloudConnect Designer, see the Downloads page at https://secure.gooddata.com/downloads.html.

If you are a white-labeled customer, log in to the Downloads page from your white-labeled domain: https://my.domain.com/downloads.html.

Ruby scripts are built and deployed using the GoodData Ruby SDK. See GoodData Automation SDK.

You can access Data Warehouse remotely with SQL via the JDBC driver provided by GoodData. Other JDBC drivers cannot be used with Data Warehouse. For more information, see Download the JDBC Driver.

The automation layer imports the data into Data Warehouse and does any necessary in-database processing using SQL. See Querying Data Warehouse.
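The import and in-database processing step can be pictured as loading raw rows into a staging table and then cleaning them with a single SQL statement. The sketch below is a minimal illustration of that pattern only. It uses Python's built-in `sqlite3` module as a local stand-in so that it runs anywhere; a real deployment would connect to Data Warehouse through the GoodData JDBC driver instead. The table names (`stg_orders`, `orders`) and the sample rows are hypothetical.

```python
import sqlite3

# sqlite3 is only a local stand-in for Data Warehouse (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE stg_orders (order_id INTEGER, customer TEXT, amount TEXT)")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")

# Import: load the raw source rows into the staging table unchanged.
raw_rows = [
    (1, " acme ", "19.90"),
    (2, "Globex", "5.00"),
    (2, "Globex", "5.00"),  # the same record delivered twice by the source
]
conn.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", raw_rows)

# In-database processing: normalize names, cast amounts, and drop exact duplicates.
conn.execute(
    """
    INSERT INTO orders (order_id, customer, amount)
    SELECT DISTINCT order_id, UPPER(TRIM(customer)), CAST(amount AS REAL)
    FROM stg_orders
    """
)
conn.commit()

orders = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
```

Doing the cleanup inside the database keeps the raw data in place for reprocessing and avoids moving rows out and back in just to transform them.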

The data is extracted from Data Warehouse using SQL and imported into a GoodData project. During extraction, any additional transformations are performed either in the database using SQL or in CloudConnect Designer before the data is uploaded to the presentation layer. Users then access the data through the presentation layer.
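To make the extraction step more concrete, the sketch below runs a SQL query that aggregates while it extracts and writes the result as CSV text. It is an illustration under stated assumptions: `sqlite3` again stands in for Data Warehouse, the `orders` table and its rows are hypothetical, `extract_to_csv` is a hypothetical helper, and the actual mechanism that loads the extract into a GoodData project is not shown.

```python
import csv
import io
import sqlite3

# sqlite3 is only a local stand-in for Data Warehouse (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER, customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "ACME", 20.0), (2, "ACME", 5.0), (3, "GLOBEX", 7.5)],
)


def extract_to_csv(connection):
    """Run the extraction query and return the result as CSV text."""
    # The transformation (aggregation per customer) happens in SQL during extraction.
    cursor = connection.execute(
        """
        SELECT customer, COUNT(*) AS order_count, SUM(amount) AS total_amount
        FROM orders
        GROUP BY customer
        ORDER BY customer
        """
    )
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Header row comes from the column names of the query result.
    writer.writerow([column[0] for column in cursor.description])
    writer.writerows(cursor)
    return buffer.getvalue()


extract = extract_to_csv(conn)
```

Because the aggregation is part of the extraction query, only the rows needed for reporting leave the database.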