Data Pipeline Blueprints

Data pipeline blueprints describe the available options for data extraction, transformation, and workspace loading.

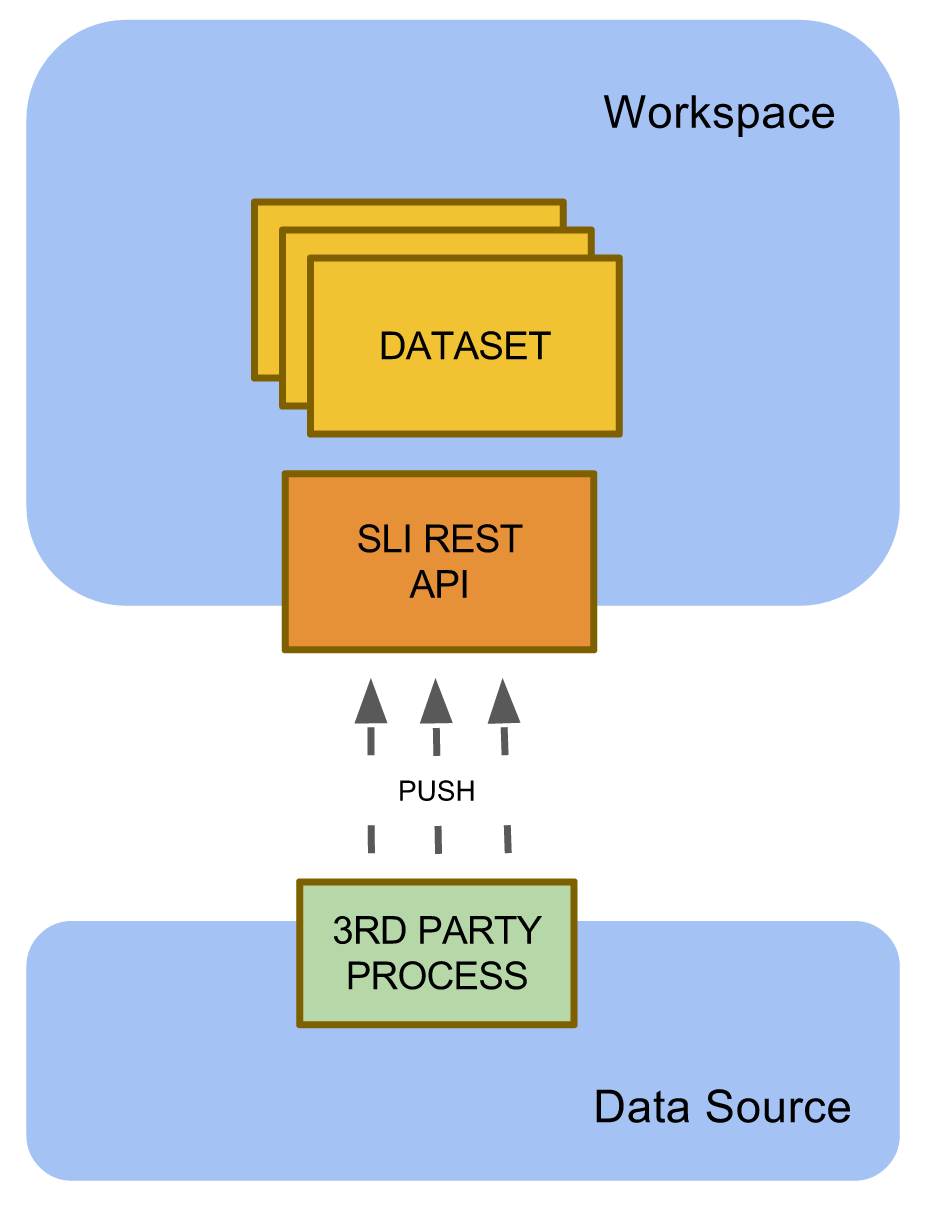

Workspace Data Loading

The workspace data loading blueprint is the simplest in terms of its footprint on the GoodData platform. It relies on a third-party process, executed outside the GoodData platform, that pushes data to one or more GoodData workspaces via the GoodData SLI REST API. For more information, see Loading Data via REST API.
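The following minimal Python sketch shows how responsibilities are divided in this blueprint. It assumes that the external tool has already extracted and transformed the data, and it hides the actual REST calls behind a hypothetical `push` callable that takes a workspace ID and a CSV payload. The names `to_csv`, `run_external_load`, and `push` are illustrative only and are not part of any GoodData API. The real upload and load steps are described in Loading Data via REST API.

```python
import csv
import io
from typing import Callable, Dict, Iterable, List

# Hypothetical hook for whatever actually calls the GoodData REST API.
# It receives a workspace ID and a CSV payload; the real HTTP calls are out of scope.
PushFn = Callable[[str, str], None]


def to_csv(rows: Iterable[Dict[str, object]], columns: List[str]) -> str:
    """Serialize rows to CSV text with a header row, in the given column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, lineterminator="\n")
    writer.writeheader()
    for row in rows:
        writer.writerow({c: row[c] for c in columns})
    return buf.getvalue()


def run_external_load(
    rows: Iterable[Dict[str, object]],
    columns: List[str],
    workspace_ids: List[str],
    push: PushFn,
) -> None:
    """Push one finished dataset to each target workspace."""
    # Extraction and transformation already happened in the third-party tool;
    # GoodData only receives the final payload.
    payload = to_csv(rows, columns)
    for workspace_id in workspace_ids:
        push(workspace_id, payload)
```

During a dry run, `push` can be a stub that records its arguments. In production, it would wrap whatever client your ETL tool uses to call the REST API.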

This blueprint is suitable when you have already adopted an ETL infrastructure that you want to integrate with the GoodData platform, for example:

- Informatica

- Keboola Connect

- MS Azure platform with MS SQL transformation services

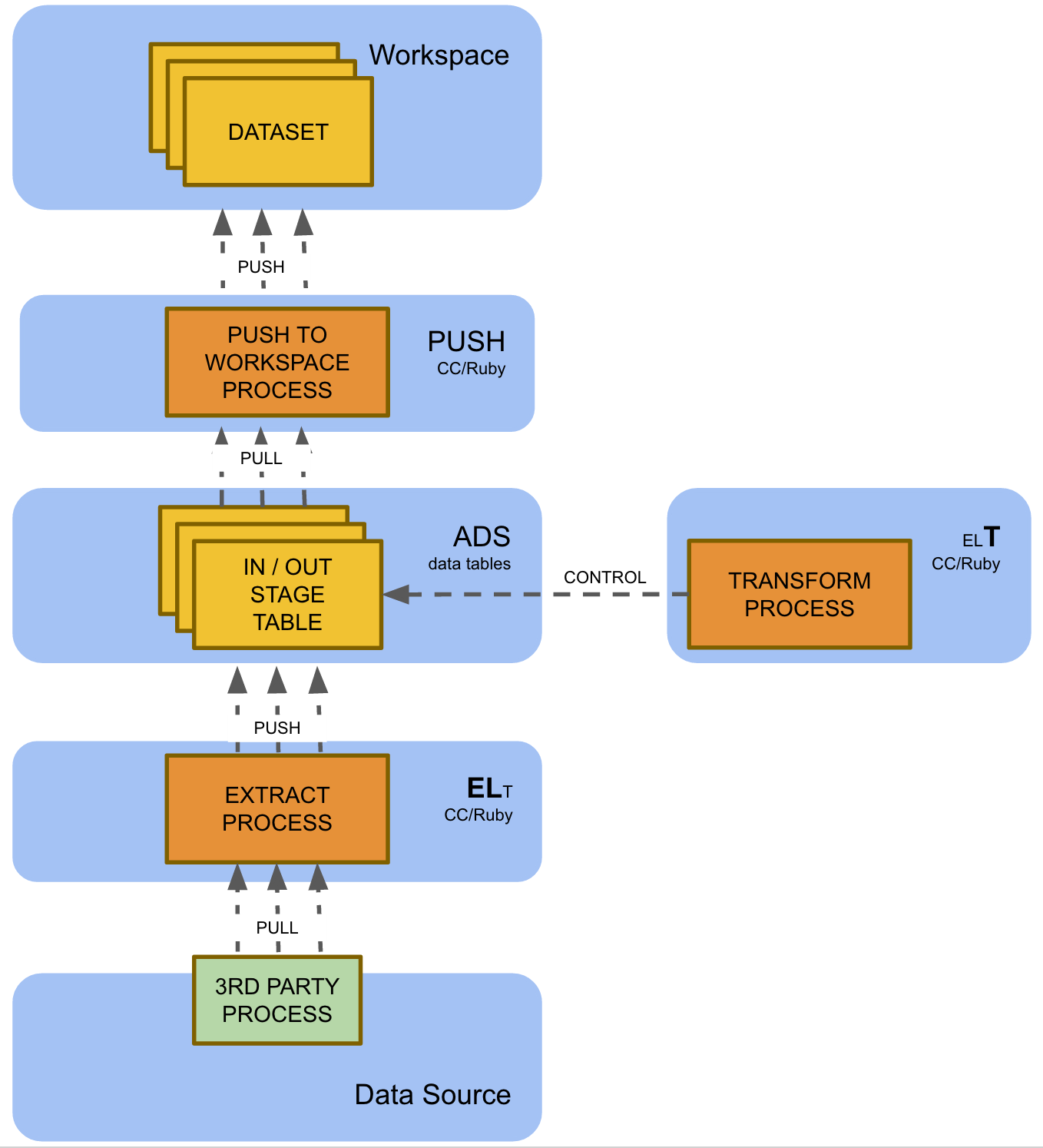

End-to-End ELT

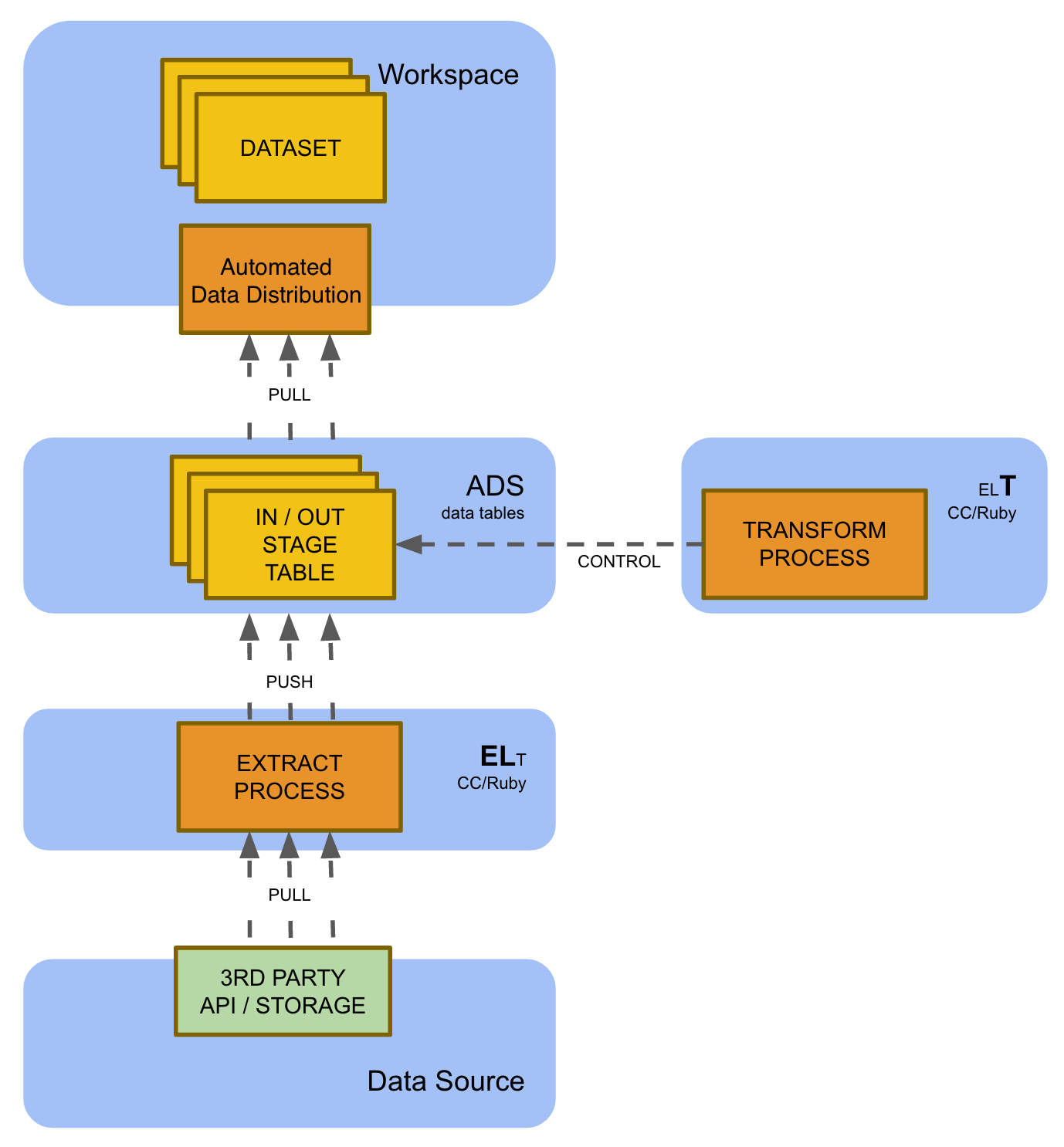

This is currently the most comprehensive GoodData data pipeline blueprint. End-to-End ELT (Extract, Load, Transform) uses a custom extraction process (CloudConnect or Ruby) for incremental data extraction, transforms the staged data in ADS, and loads the workspaces either with a custom CloudConnect process or with Automated Data Distribution (for details, see Automated Data Distribution).
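The incremental ELT flow can be sketched in Python as follows. This is a minimal illustration, not GoodData's implementation: `sqlite3` stands in for ADS, a watermark on a hypothetical `updated_at` column drives the incremental extraction, and the table names `stg_orders` and `out_orders` are invented for this example. Raw rows are loaded into staging first and only then transformed with SQL inside the store, which is what distinguishes ELT from ETL.

```python
import sqlite3
from typing import Dict, List, Tuple

# sqlite3 is only a stand-in for ADS; table and column names are hypothetical.
DDL = [
    "CREATE TABLE stg_orders (order_id INTEGER, tenant TEXT, amount REAL, updated_at INTEGER)",
    "CREATE TABLE out_orders (order_id INTEGER PRIMARY KEY, tenant TEXT, amount REAL)",
]


def extract_incremental(source: List[Dict], watermark: int) -> Tuple[List[Dict], int]:
    """Extract only rows changed since the last run and return the new watermark."""
    changed = [r for r in source if r["updated_at"] > watermark]
    new_watermark = max((r["updated_at"] for r in changed), default=watermark)
    return changed, new_watermark


def load_to_staging(conn: sqlite3.Connection, rows: List[Dict]) -> None:
    """Load raw rows as-is: the 'L' happens before the 'T'."""
    conn.executemany(
        "INSERT INTO stg_orders VALUES (:order_id, :tenant, :amount, :updated_at)",
        rows,
    )


def transform_in_staging(conn: sqlite3.Connection) -> None:
    """Transform with SQL inside the store: keep the latest version of each order."""
    conn.execute("DELETE FROM out_orders")
    conn.execute(
        """
        INSERT OR REPLACE INTO out_orders (order_id, tenant, amount)
        SELECT s.order_id, s.tenant, s.amount
        FROM stg_orders AS s
        WHERE s.updated_at = (
            SELECT MAX(updated_at) FROM stg_orders WHERE order_id = s.order_id
        )
        """
    )


def run_cycle(conn: sqlite3.Connection, source: List[Dict], watermark: int) -> int:
    """One pipeline run: incremental extract, load to staging, transform in place."""
    rows, new_watermark = extract_incremental(source, watermark)
    load_to_staging(conn, rows)
    transform_in_staging(conn)
    conn.commit()
    return new_watermark
```

Calling `run_cycle` repeatedly with the returned watermark extracts only new or changed source rows on each run, while staging keeps the history needed to derive the output. For simplicity, the sketch rebuilds the output table on every run; a production pipeline would more likely merge only the affected keys.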

This blueprint is currently used for the largest GoodData multi-tenant solution implementations, with hundreds to thousands of tenants. It is the only option in the following situations:

- Multi-tenant solutions that populate multiple workspaces with data, including:

    - PbG (Powered by GoodData) solutions where the data is spread across multiple workspaces (usually, each workspace is mapped to an ultimate customer/tenant)

    - Corporate solutions that populate multiple workspaces (for example, sales/marketing/support workspaces) with data from a single ADS instance

- Incremental workspace data loading (large data volumes in workspaces)
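In the multi-tenant cases above, the distribution step comes down to splitting the transformed output by tenant and routing each slice to that tenant's workspace. The Python sketch below assumes a hypothetical `tenant_to_workspace` mapping and a `tenant` field on every row; `partition_by_workspace` is an illustrative name, not a GoodData API. When the sketch receives only incrementally extracted rows, it produces batches only for the workspaces whose tenants actually changed.

```python
from collections import defaultdict
from typing import Dict, List


def partition_by_workspace(
    rows: List[Dict], tenant_to_workspace: Dict[str, str]
) -> Dict[str, List[Dict]]:
    """Group output rows by target workspace using a tenant -> workspace mapping."""
    batches: Dict[str, List[Dict]] = defaultdict(list)
    unmapped = set()
    for row in rows:
        workspace_id = tenant_to_workspace.get(row["tenant"])
        if workspace_id is None:
            # Collect every unmapped tenant so the error lists them all at once.
            unmapped.add(row["tenant"])
            continue
        batches[workspace_id].append(row)
    if unmapped:
        raise KeyError(f"No workspace mapped for tenants: {sorted(unmapped)}")
    return dict(batches)
```

Raising an error on unmapped tenants, rather than silently skipping them, is a deliberate choice. A row without a target workspace usually signals a provisioning gap, and that gap should surface before data goes missing.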

A variant of this blueprint uses Automated Data Distribution for workspace loading. This variant is recommended when the data loaded into each workspace is small enough to be loaded in full. This may require significant aggregation or filtering in ADS to reduce the data volume.
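The following short Python sketch illustrates this kind of aggregation, again with `sqlite3` standing in for ADS. It collapses order-level rows into one row per tenant and day, which keeps the output table small enough to reload in full on every run. The table and column names (`stg_orders`, `out_daily_sales`, `order_date`) are hypothetical.

```python
import sqlite3


def build_full_load_table(conn: sqlite3.Connection) -> int:
    """Rebuild a compact, aggregated output table and return its row count."""
    # The table is recreated from scratch each run, matching a full (non-incremental) load.
    conn.execute("DROP TABLE IF EXISTS out_daily_sales")
    conn.execute(
        """
        CREATE TABLE out_daily_sales AS
        SELECT tenant,
               order_date,
               SUM(amount) AS total_amount,
               COUNT(*)    AS order_count
        FROM stg_orders
        GROUP BY tenant, order_date
        """
    )
    return conn.execute("SELECT COUNT(*) FROM out_daily_sales").fetchone()[0]
```

In this example, four order rows for two tenants across two days aggregate to three output rows. With real order volumes, grouping by day typically shrinks the table much further. Choosing a coarser grain trades analytical detail for a smaller full load.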